ECS175: COMPUTER GRAPHICS

INSTRUCTOR: Kwan-Liu Ma

CLASS TIMES: MWF, 2:10-3:00 PM, Olson 146

PROJECTS

PROJECT 0: Setting It Up (due 11:59pm, October 10)

Welcome to ECS 175, Introduction to Computer Graphics. In this course you will learn the fundamentals of the 3D graphics pipeline.

First, though, we need to begin with the basics and build up the projects step by step. To do so, you need to set up the infrastructure required to compile and run your programs in the selected programming environment. This special project is designed to get you ready for the four programming assignments. It will not be graded. Make sure you set up your work environment properly by following the steps below so you can hit the ground running on Project 1.

Throughout this course you will use two core libraries:

1. Qt5: https://qt-project.org/

2. OpenGL: http://www.opengl.org/

Step 1: Get the project0 code from ~cs175/Public on CSIF

Step 2: Compile the p0.pro Qt project file using qmake

First ensure you have the proper qmake with: qmake --version (should print Qt Version 5.x.x)

Then create a directory called p0-build, cd into it, and run the following:

1. qmake ../project0/p0.pro (on CSIF, do "qmake-qt5 ../project0/p0.pro" instead)

2. make

3. Run the code (for help add -h):

- ./p0 -d simple

- ./p0 -d signals_and_slots

- ./p0 -d uidemo

- ./p0 -d opengl (You should see a triangle in the display window.)

Step 3: Extend this OpenGL code as follows:

1. Create a user interface to modify the display window's background color and also the triangle's color

2. Print the (x,y) location when the right mouse button is clicked (look at QMessageBox)

3. Finally, create a simple functioning calculator demo application using QtDesigner

If everything works, you are ready to do Project 1 :)

We also suggest that you:

- Learn about Version Control Systems (e.g., Git, SVN, or Mercurial)

- Learn about Doxygen

- Learn about gDebugger

PROJECT 1: A Basic Viewer (due 11:59pm, October 23)

Your first project is the implementation of a basic 3D viewer in OpenGL.

Let's break the project down into smaller tasks. The viewer must read the description of a 3D scene from a file that contains the definition, position, and orientation of several models. Each model is described using a simple, well-known format called Wavefront OBJ (http://en.wikipedia.org/wiki/Wavefront_.obj_file). We provide parser code for reading OBJ files; you can find it in ~cs175/Public on CSIF. While a model file is being read and parsed, your program should store the model in memory using an internal representation (i.e., a data structure) that you design. Once the program has an internal representation of the model, it should be able to display an image of the model. This is where OpenGL comes in. OpenGL is a platform-independent software interface to graphics hardware. It is the library that provides the drawing routines: it sets colors, fills polygons, applies texture maps, etc. You will create a scene with multiple models. Through the viewer, you should be able to manage the scene by manipulating each model. Eventually, the scene will include advanced options such as light sources. A simple scene parser is also provided in ~cs175/Public.
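The internal representation is yours to design. As one possible starting point, here is a minimal sketch of an indexed triangle mesh in C++. The names Vec3, Mesh, addVertex, addTriangle, and makeQuad are hypothetical and are not part of the provided parser code.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Hypothetical internal representation: an indexed triangle mesh.
struct Vec3 {
    float x, y, z;
};

struct Mesh {
    std::vector<Vec3> positions;                 // one entry per unique vertex
    std::vector<std::array<unsigned, 3>> faces;  // three vertex indices per triangle

    // Append a vertex and return its index.
    unsigned addVertex(Vec3 p) {
        positions.push_back(p);
        return static_cast<unsigned>(positions.size() - 1);
    }

    // Append a triangle; every index must refer to an existing vertex.
    void addTriangle(unsigned a, unsigned b, unsigned c) {
        assert(a < positions.size() && b < positions.size() && c < positions.size());
        faces.push_back(std::array<unsigned, 3>{a, b, c});
    }
};

// Build a unit square in the z = 0 plane from two triangles.
Mesh makeQuad() {
    Mesh m;
    unsigned v0 = m.addVertex({0.0f, 0.0f, 0.0f});
    unsigned v1 = m.addVertex({1.0f, 0.0f, 0.0f});
    unsigned v2 = m.addVertex({1.0f, 1.0f, 0.0f});
    unsigned v3 = m.addVertex({0.0f, 1.0f, 0.0f});
    m.addTriangle(v0, v1, v2);
    m.addTriangle(v0, v2, v3);
    return m;
}
```

Sharing vertices through indices keeps the data compact and maps naturally onto the vertex and face records of an OBJ file. Normals, colors, or per-model transforms could be added as further members.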

But OpenGL alone cannot create a window to display the scene, and for that we will use Qt. Once you have a window with an image of the scene, you'll need to create a nice interactive user interface for navigating in and manipulating the scene. The Qt library will be used to deal with interactions as well as to create controls such as buttons, checkboxes, lists, etc. After finishing this project, you should have a grasp of basic OpenGL and UI toolkits which you can build on in ECS 175 and beyond to develop scientific visualization programs, CAD/CAM software, and computer games.

Using OpenGL allows you to leverage the power of the graphics hardware (the GPU). With this project, you will get a sense of how fast hardware-accelerated rendering can be. In later projects, you will write software routines to replace OpenGL calls for scan conversion, clipping, shading, texturing, etc. In this way, you will learn the details of how rendering is actually done.

- Tasks:

- [30%] Create a single 3D model (e.g., a cube, cone, or sphere) by hand or using a tool, and display it in your viewer using orthographic projection and flat-shading. Note that you will need to set up frames and transformation matrices for this model and other ones.

- [20%] Make a simple user interface through which you can translate, scale, and rotate the model (a single object) using a mouse.

- [10%] Toggle the display between flat-shaded and wireframe rendering, and also between orthographic projection and perspective projection

- [10%] Construct more complex models such as desks or chairs from the simple ones. You may notice that even very simple shapes are tedious and time consuming to make by hand.

- [15%] Construct, load, render, and navigate a scene (e.g., a classroom) consisting of multiple models (e.g., desks and chairs) made by you or taken from the Web. Be creative and have fun. You should be able to translate, scale, and rotate the whole scene using a mouse. Feel free to use the example scene loader provided in Project 0.

- [15%] Expand the user interface so you can select any object in the scene to modify its size, position, and shape. The selection may be done by simply choosing from the list of objects in the scene rather than direct 3D picking. You should be able to save the current scene into a file so later you can restore the scene.
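The translate, scale, and rotate tasks above all come down to building 4x4 matrices and composing them. The following is a minimal sketch under stated assumptions: the Mat4 type and the helper names are hypothetical, the storage is row-major, and points are treated as column vectors with w = 1. OpenGL and Qt (for example QMatrix4x4) provide their own matrix facilities that you may prefer in the actual project.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

// 4x4 matrix stored row-major: element (r, c) lives at m[r * 4 + c].
struct Mat4 {
    std::array<float, 16> m{};  // zero-initialized

    float& at(int r, int c) {
        assert(r >= 0 && r < 4 && c >= 0 && c < 4);
        return m[static_cast<std::size_t>(r * 4 + c)];
    }
    float at(int r, int c) const {
        assert(r >= 0 && r < 4 && c >= 0 && c < 4);
        return m[static_cast<std::size_t>(r * 4 + c)];
    }
};

Mat4 identity() {
    Mat4 I;
    for (int i = 0; i < 4; ++i) I.at(i, i) = 1.0f;
    return I;
}

// Matrix product a * b (b is applied to a point first, then a).
Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 r;
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r.at(i, j) += a.at(i, k) * b.at(k, j);
    return r;
}

Mat4 translation(float tx, float ty, float tz) {
    Mat4 t = identity();
    t.at(0, 3) = tx;
    t.at(1, 3) = ty;
    t.at(2, 3) = tz;
    return t;
}

Mat4 scaling(float sx, float sy, float sz) {
    Mat4 s = identity();
    s.at(0, 0) = sx;
    s.at(1, 1) = sy;
    s.at(2, 2) = sz;
    return s;
}

// Counter-clockwise rotation about the z axis.
Mat4 rotationZ(float radians) {
    Mat4 r = identity();
    float c = std::cos(radians), s = std::sin(radians);
    r.at(0, 0) = c;
    r.at(0, 1) = -s;
    r.at(1, 0) = s;
    r.at(1, 1) = c;
    return r;
}

// Apply M to the point p, treating p as (x, y, z, 1).
std::array<float, 3> transformPoint(const Mat4& M, const std::array<float, 3>& p) {
    std::array<float, 3> out{};
    for (int r = 0; r < 3; ++r)
        out[static_cast<std::size_t>(r)] =
            M.at(r, 0) * p[0] + M.at(r, 1) * p[1] + M.at(r, 2) * p[2] + M.at(r, 3);
    return out;
}
```

For example, multiply(translation(1, 2, 3), scaling(2, 2, 2)) scales a point first and then translates it, so the point (1, 1, 1) ends up at (3, 4, 5). Order matters: swapping the two factors would scale the translation as well.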

If you use code and/or models from the Web, please declare them.

Extra credit is possible if significant extra effort is demonstrated.

- Other considerations:

- If you write a parser yourself for other model formats like VTK or MDL, you will then be able to use the huge number of models available online.

PROJECT 2: Rasterization (due 11:59pm, November 9)

In Project 1 you saw the power of OpenGL. In this project you will delve into the core rendering pipeline. The goal is to take you from reading geometry to rendering the final image. You will replicate the functionality of the previous assignment in software. This assignment covers transformations, culling, clipping, projection, rasterization, and creating simple shaders.

To simplify things, you will only be asked to process triangles. For the end result you will need to create an RGBA buffer and display it as the final image. Instead of using OpenGL constructs such as vertex buffer objects to provide rendering storage, you will use CPU-based data structures of your choice to hold the vertex, color, and index lists. The software-based vertex shader is called on each vertex, and the result of the shader execution is stored for further processing by the next stage of your software OpenGL pipeline. Once all vertices are processed, the projected points (x, y, z, w) go through view transformation, culling, clipping, perspective divide, and then rasterization.
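The per-vertex part of this pipeline can be sketched as follows. This is a minimal illustration, not a required design: Vec4, Mat4, vertexShader, toScreen, and ScreenVertex are hypothetical names, the matrix is stored row-major, and the perspective matrix uses the standard OpenGL-style form (the same form gluPerspective produces). The mapping to pixels assumes an image whose origin is at the top-left corner, which is why y is flipped.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec4 {
    float x, y, z, w;
};
using Mat4 = std::array<float, 16>;  // row-major: element (r, c) at index r * 4 + c

// Matrix times column vector.
Vec4 mul(const Mat4& m, const Vec4& v) {
    return {m[0] * v.x + m[1] * v.y + m[2] * v.z + m[3] * v.w,
            m[4] * v.x + m[5] * v.y + m[6] * v.z + m[7] * v.w,
            m[8] * v.x + m[9] * v.y + m[10] * v.z + m[11] * v.w,
            m[12] * v.x + m[13] * v.y + m[14] * v.z + m[15] * v.w};
}

// OpenGL-style perspective projection; the camera looks down the -z axis.
Mat4 perspective(float fovyRadians, float aspect, float zNear, float zFar) {
    assert(zNear > 0.0f && zFar > zNear && aspect > 0.0f);
    float f = 1.0f / std::tan(fovyRadians / 2.0f);
    Mat4 m{};
    m[0] = f / aspect;
    m[5] = f;
    m[10] = (zFar + zNear) / (zNear - zFar);
    m[11] = 2.0f * zFar * zNear / (zNear - zFar);
    m[14] = -1.0f;
    return m;
}

// Software "vertex shader": object-space position to clip space.
Vec4 vertexShader(const Mat4& mvp, const Vec4& position) {
    return mul(mvp, position);
}

struct ScreenVertex {
    float x, y;   // pixel coordinates
    float depth;  // 0 at the near plane, 1 at the far plane
};

// Perspective divide followed by the mapping from NDC to pixels.
ScreenVertex toScreen(const Vec4& clip, int width, int height) {
    assert(clip.w != 0.0f);
    float ndcX = clip.x / clip.w;
    float ndcY = clip.y / clip.w;
    float ndcZ = clip.z / clip.w;
    return {(ndcX * 0.5f + 0.5f) * width,
            (1.0f - (ndcY * 0.5f + 0.5f)) * height,  // flip y: image row 0 is the top
            ndcZ * 0.5f + 0.5f};
}
```

With a 90 degree field of view, a near plane at 1, and a far plane at 10, a point at the center of the near plane lands in the middle of the image with depth 0, and a point at the center of the far plane gets depth 1. Culling and clipping would sit between vertexShader and toScreen.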

During rasterization, each triangle is scan converted into pixels, and a software fragment shader is called to process each pixel. A depth value is associated with each pixel; testing it against the z-buffer determines whether the pixel should be drawn, that is, whether it is closer to the camera than the pixel previously drawn at that location.
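One common way to scan convert a triangle is to test each pixel center in the triangle's bounding box against the three edge functions, which also yields barycentric weights for interpolation. The sketch below uses that approach together with a z-buffer test. It is only one possible implementation: Framebuffer, ScreenVertex, fragmentShader, and rasterize are hypothetical names, the depth buffer is cleared to 1.0 (the far plane), and attributes are interpolated linearly in screen space, which is not perspective-correct.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct ScreenVertex {
    float x, y, z;  // screen-space position and depth in [0, 1]
    float r, g, b;  // vertex color in [0, 1]
};

struct Framebuffer {
    int width, height;
    std::vector<std::uint32_t> color;  // packed RGBA, one entry per pixel
    std::vector<float> depth;          // z-buffer

    Framebuffer(int w, int h)
        : width(w), height(h),
          color(static_cast<std::size_t>(w) * static_cast<std::size_t>(h), 0u),
          depth(static_cast<std::size_t>(w) * static_cast<std::size_t>(h), 1.0f) {
        assert(w > 0 && h > 0);
    }

    // Software analogue of glClear(): reset color and depth (1.0 = far plane).
    void clear(std::uint32_t rgba) {
        std::fill(color.begin(), color.end(), rgba);
        std::fill(depth.begin(), depth.end(), 1.0f);
    }
};

// Software "fragment shader": returns the interpolated color, packed as RGBA.
std::uint32_t fragmentShader(float r, float g, float b) {
    auto to8 = [](float v) {
        float c = std::min(1.0f, std::max(0.0f, v));
        return static_cast<std::uint32_t>(std::lround(c * 255.0f));
    };
    return (to8(r) << 24) | (to8(g) << 16) | (to8(b) << 8) | 0xFFu;
}

// Edge function: twice the signed area of the triangle (a, b, p).
float edge(float ax, float ay, float bx, float by, float px, float py) {
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax);
}

void rasterize(Framebuffer& fb, const ScreenVertex& a, const ScreenVertex& b,
               const ScreenVertex& c) {
    float area = edge(a.x, a.y, b.x, b.y, c.x, c.y);
    if (area == 0.0f) return;  // degenerate triangle
    // Bounding box of the triangle, clamped to the framebuffer.
    int minX = std::max(0, static_cast<int>(std::floor(std::min({a.x, b.x, c.x}))));
    int maxX = std::min(fb.width - 1, static_cast<int>(std::ceil(std::max({a.x, b.x, c.x}))));
    int minY = std::max(0, static_cast<int>(std::floor(std::min({a.y, b.y, c.y}))));
    int maxY = std::min(fb.height - 1, static_cast<int>(std::ceil(std::max({a.y, b.y, c.y}))));
    for (int y = minY; y <= maxY; ++y) {
        for (int x = minX; x <= maxX; ++x) {
            float px = x + 0.5f, py = y + 0.5f;  // sample at the pixel center
            // Barycentric weights; dividing by area makes either winding work.
            float w0 = edge(b.x, b.y, c.x, c.y, px, py) / area;
            float w1 = edge(c.x, c.y, a.x, a.y, px, py) / area;
            float w2 = edge(a.x, a.y, b.x, b.y, px, py) / area;
            if (w0 < 0.0f || w1 < 0.0f || w2 < 0.0f) continue;  // outside the triangle
            float z = w0 * a.z + w1 * b.z + w2 * c.z;
            std::size_t i = static_cast<std::size_t>(y) * static_cast<std::size_t>(fb.width) +
                            static_cast<std::size_t>(x);
            if (z >= fb.depth[i]) continue;  // depth test: keep the closer fragment
            fb.depth[i] = z;
            fb.color[i] = fragmentShader(w0 * a.r + w1 * b.r + w2 * c.r,
                                         w0 * a.g + w1 * b.g + w2 * c.g,
                                         w0 * a.b + w1 * b.b + w2 * c.b);
        }
    }
}
```

Drawing a far red triangle and then a nearer green one over the same pixels leaves green in the color buffer, and drawing the red one again does not overwrite it, because its depth fails the test.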

The final image after all the pixels are processed should be rendered side-by-side with the OpenGL solution from Project 1.

Additional detail: The software-based vertex shader needs to be able to perform a simple model-view-projection matrix multiplication as shown, and the fragment shader should simply return the final color. In Qt, it is possible to implement the software vertex shader using QtScript. The final image can be created and shown using a QImage or QPixmap rendered within a QWidget.

Tasks:

- [ 5%] Create a Z-buffer for performing depth test and a Color Buffer for the final scene and implement the glClear() operation.

- [ 5%] Store position, color, and indices in a software buffer.

- [10%] Process buffer through a software vertex shader to apply Model, View, and Projection transformations per vertex.

- [20%] Perform culling, clipping, divide by w and view transform.

- [20%] Rasterize the triangle into the color buffer and interpolate position and color.

- [10%] Invoke fragment shader for each pixel.

- [10%] Update the pixel if the new z value is smaller than what is stored in the z-buffer.

- [10%] Display the color buffer on screen and compare against OpenGL implementation.
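For the culling, clipping, and divide-by-w task, the sketch below shows three small building blocks: clip-space outcodes for trivial rejection, the perspective divide, and a winding-based back-face test. All names are hypothetical. It assumes the OpenGL clip volume -w <= x, y, z <= w and counter-clockwise front faces with y pointing up, and it performs the back-face test after the divide, which is only one possible ordering. Full clipping of triangles that straddle a plane (for example with the Sutherland-Hodgman algorithm) is not shown.

```cpp
#include <cassert>

struct Vec4 {
    float x, y, z, w;
};

// One bit per violated clip plane of the volume -w <= x, y, z <= w.
unsigned outcode(const Vec4& v) {
    unsigned code = 0;
    if (v.x < -v.w) code |= 1u;
    if (v.x > v.w) code |= 2u;
    if (v.y < -v.w) code |= 4u;
    if (v.y > v.w) code |= 8u;
    if (v.z < -v.w) code |= 16u;
    if (v.z > v.w) code |= 32u;
    return code;
}

// True when all three vertices lie outside the same plane: nothing is visible.
bool triviallyRejected(const Vec4& a, const Vec4& b, const Vec4& c) {
    return (outcode(a) & outcode(b) & outcode(c)) != 0u;
}

// True when at least one vertex is outside, so real clipping may be needed.
bool needsClipping(const Vec4& a, const Vec4& b, const Vec4& c) {
    return (outcode(a) | outcode(b) | outcode(c)) != 0u;
}

// Clip space to normalized device coordinates.
Vec4 perspectiveDivide(const Vec4& clip) {
    assert(clip.w != 0.0f);
    return {clip.x / clip.w, clip.y / clip.w, clip.z / clip.w, 1.0f};
}

// Twice the signed area of the projected triangle; positive when a, b, c
// appear counter-clockwise (y up).
float signedArea2(const Vec4& a, const Vec4& b, const Vec4& c) {
    return (b.x - a.x) * (c.y - a.y) - (c.x - a.x) * (b.y - a.y);
}

// Back-face test on NDC vertices, assuming counter-clockwise front faces.
bool isBackFacing(const Vec4& a, const Vec4& b, const Vec4& c) {
    return signedArea2(a, b, c) <= 0.0f;
}
```

A triangle that is trivially rejected or back-facing can be skipped before rasterization, which is where most of the savings come from.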

You can earn extra credit by adding transparency to your scene.

PROJECT 3: Lighting and Shading (due 11:59pm, November 25)

In this project, you are asked to extend your 3D viewer to achieve more realistic rendering. You are to implement functions that handle somewhat more sophisticated lighting and shading of polygons than the flat shading you have used so far. The required algorithms are Gouraud shading and Phong shading using the Phong illumination model.

You will implement the shading methods in the shader program using GLSL. The values of all the lighting parameters should be modifiable through an interactive user interface so you can experiment with different lighting effects and material types.
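Although the project code will live in GLSL shaders, the math of the Phong illumination model may be easier to check on the CPU first. The following C++ sketch evaluates the ambient, diffuse, and specular terms for a single point light. The names Material, PointLight, and phongIllumination are hypothetical, attenuation and directional lights are left out, and the specular term uses the reflection vector R = 2(N.L)N - L.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 scale(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
Vec3 modulate(Vec3 a, Vec3 b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }  // component-wise
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return scale(v, 1.0f / len);
}

struct Material {
    Vec3 ka, kd, ks;  // ambient, diffuse, and specular reflectance
    float shininess;  // specular exponent
};

struct PointLight {
    Vec3 position;
    Vec3 color;
};

// Phong illumination at one surface point for one point light.
Vec3 phongIllumination(const Material& m, const PointLight& light, Vec3 ambientLight,
                       Vec3 point, Vec3 normal, Vec3 eye) {
    assert(m.shininess >= 0.0f);
    Vec3 N = normalize(normal);
    Vec3 L = normalize(sub(light.position, point));  // direction to the light
    Vec3 V = normalize(sub(eye, point));             // direction to the viewer
    Vec3 color = modulate(m.ka, ambientLight);       // ambient term
    float nDotL = dot(N, L);
    if (nDotL > 0.0f) {  // the light is on the front side of the surface
        color = add(color, scale(modulate(m.kd, light.color), nDotL));  // diffuse term
        Vec3 R = sub(scale(N, 2.0f * nDotL), L);                        // reflection of L about N
        float rDotV = std::max(0.0f, dot(R, V));
        color = add(color, scale(modulate(m.ks, light.color),
                                 std::pow(rDotV, m.shininess)));        // specular term
    }
    return color;
}
```

Gouraud shading would evaluate such a function once per vertex and interpolate the resulting colors across the triangle, while Phong shading interpolates the normals and evaluates it once per fragment. Supporting several lights amounts to summing the diffuse and specular terms over all of them.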

Tasks:

- 25% - implement Gouraud shading using the complete Phong illumination model

- 15% - implement Phong shading

- 10% - support multiple light sources

- 15% - allow the user to toggle between flat shading, Gouraud shading, and Phong shading

- 10% - implement point and directional light sources and allow the user to toggle between them for each light source

- 15% - allow the user to interactively change material properties for each object/model (Ka, Kd, Ks) and save the new setting to the model file.

- 10% - allow the user to interactively change properties of each light source (e.g., light intensity, position, direction, color) and save the new setting to the model/scene-graph file.

You are encouraged to implement additional features such as an A-buffer to handle transparency.

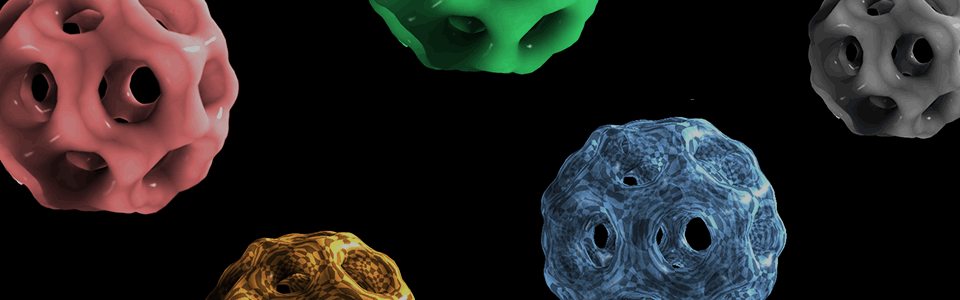

PROJECT 4: Texture Mapping (due 11:59pm, December 15)

The quarter is almost over, but the graphics fun has just begun! Your images will really pop when you spruce them up with some textures. We hope after this final project, you will be even more fascinated by the power of computer graphics. You can now create more realistic objects and worlds which were only in your imagination.

In this project, you will implement some of the 2D texture mapping methods in OpenGL using the graphics card.

Texture maps are rectangular arrays of color values used to modify the final colors of generated primitives on a per-pixel basis. Texture coordinates are normalized, such that a coordinate (0, 0) always maps to the lower-left corner of the current texture image; (1, 1) always maps to the upper-right corner of the current texture image, regardless of its size.

- Texture coordinate clamping: all coordinates smaller than zero are clamped to zero, and all coordinates larger than one are clamped to one.

- Texture coordinate repeating: a coordinate x is wrapped to the coordinate x - floor(x), thereby "tiling" the texture across the primitive.

After the interpolated texture coordinates have been wrapped to the interval [0, 1] using one of the above methods, they are scaled to the sizes of the current texture image, and the texture value is computed.
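The two wrapping rules and the scaling step translate almost directly into code. This is a minimal CPU-side sketch with hypothetical function names; in the project itself the wrap mode is normally selected through OpenGL texture parameters rather than computed by hand.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Clamping: coordinates below zero become zero, coordinates above one become one.
float wrapClamp(float t) {
    return std::min(1.0f, std::max(0.0f, t));
}

// Repeating: x is wrapped to x - floor(x), which tiles the texture.
float wrapRepeat(float t) {
    return t - std::floor(t);
}

// Scale a wrapped coordinate in [0, 1] to a texel index in [0, size - 1].
int toTexelIndex(float t, int size) {
    assert(size > 0);
    int i = static_cast<int>(std::floor(t * static_cast<float>(size)));
    return std::min(size - 1, std::max(0, i));  // t == 1 maps to the last texel
}
```

For example, wrapRepeat(2.25f) and wrapRepeat(-0.75f) both give 0.25, so texture coordinates that run from -1 to 3 across a quad show four copies of the image side by side.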

There are two different methods to compute a texture value:

- Nearest-neighbor sampling: The value of the texture map entry ("texel") closest to the computed texture coordinate is returned.

- Bi-linear interpolation: The texture value is computed by looking up the four texels surrounding the computed texture coordinate, and averaging their values using bi-linear interpolation.
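The two lookup methods can be compared with a small CPU-side sketch. The assumptions here are illustrative only: the Texture type is hypothetical and holds a single float channel, row 0 is the bottom row (matching the convention that (0, 0) is the lower-left corner), texel centers sit at half-integer positions, and lookups outside the image are clamped to the edge.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Texture {
    int width, height;
    std::vector<float> texels;  // row-major, single channel, row 0 = bottom row

    // Fetch one texel, clamping the indices to the image edge.
    float texel(int x, int y) const {
        assert(width > 0 && height > 0);
        assert(texels.size() ==
               static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
        x = std::min(width - 1, std::max(0, x));
        y = std::min(height - 1, std::max(0, y));
        return texels[static_cast<std::size_t>(y) * static_cast<std::size_t>(width) +
                      static_cast<std::size_t>(x)];
    }
};

// Nearest-neighbor: return the texel whose cell contains (u, v).
float sampleNearest(const Texture& t, float u, float v) {
    int x = static_cast<int>(std::floor(u * static_cast<float>(t.width)));
    int y = static_cast<int>(std::floor(v * static_cast<float>(t.height)));
    return t.texel(x, y);
}

// Bi-linear: blend the four texels whose centers surround (u, v).
float sampleBilinear(const Texture& t, float u, float v) {
    // Shift by half a texel so that integer positions are texel centers.
    float fx = u * static_cast<float>(t.width) - 0.5f;
    float fy = v * static_cast<float>(t.height) - 0.5f;
    int x0 = static_cast<int>(std::floor(fx));
    int y0 = static_cast<int>(std::floor(fy));
    float ax = fx - static_cast<float>(x0);  // horizontal blend weight in [0, 1)
    float ay = fy - static_cast<float>(y0);  // vertical blend weight in [0, 1)
    float bottom = t.texel(x0, y0) * (1.0f - ax) + t.texel(x0 + 1, y0) * ax;
    float top = t.texel(x0, y0 + 1) * (1.0f - ax) + t.texel(x0 + 1, y0 + 1) * ax;
    return bottom * (1.0f - ay) + top * ay;
}
```

On a 2x2 texture with values 0, 1, 2, 3, sampling at (0.5, 0.5) with bi-linear interpolation returns the average 1.5, while nearest-neighbor sampling snaps to a single texel. This is why linear filtering looks smoother but blurrier when a texture is magnified.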

Tasks:

- 20% - Apply texture to a rectangle/quad object (e.g., wall, floor)

- 40% - Make box mapping (10%), cylinder mapping (10%), sphere mapping (10%), and UV mapping (10%) with appropriate UI to choose

- 10% - Be able to switch among different texture wrap modes (repeat, mirrored repeat, clamp to edge, and clamp to border) with appropriate UI

- 5% - Be able to switch between nearest filtering and linear filtering with appropriate UI

- 15% - Implement bump mapping for box mapping (5%), cylinder mapping (5%), and sphere mapping (5%)

- 10% - Implement bump mapping for UV mapping

Extra Credit:

- 10% - Add animated textures